OUT OF YOUR HEAD

Continuing with the topic of rendering music, I would like to focus on a very important aspect of recorded audio: spatialization and virtual acoustic environments. One of the amazing things about our ears and brain is their ability to localize sound sources in a three-dimensional sound field. I consider this topic the final frontier of recorded sound.

I was first introduced to binaural recorded sound at the Audio Engineering Society (AES) convention in Anaheim, California in 1987. It was a blindfolded demonstration on headphones of an audio system called Holophonics by Hugo Zuccarelli. He was using a dummy head, which recreated sound sources localized outside the headphones and placed 360 degrees around the listener.

That demonstration forever changed my view on how recorded audio should be displayed and experienced by the listener. This experience began a 25-year journey in researching, developing, creating and producing virtual acoustic environments. Paralleling this audio path was my interest in advanced virtual reality technologies and applications. I am fairly certain I was the originator of the phrase “Virtual Audio,” having coined the term in 1989. Here is my definition, created at that time:

- Virtual: 1. being something in effect, though not so in name; for all practical purposes; actual; real.

- Audio: 1. of hearing; 2. of sound.

- Virtual Audio: 1. sound that is specially processed to contain significant psycho-acoustic information to alter human perception into believing that the sound is actually occurring in the physical reality of three-dimensional space.

My definition was later included in a paper called “Auditory Interfaces” for the Human Interface Technology Laboratory (HITLab) which you can read here: ftp://www.hitl.washington.edu/pub/scivw/publications/IDA-pdf/AUDIT.PDF

My interest in binaural sound centered around the fact that I wanted to make my recordings as real as possible. After all, what greater reference do we have than our own perceptions? In my past work, I was only using stereo and it clearly was not how we hear in everyday life. Also at that time, I was pursuing higher quality recording technology as well. I found out later that spatial audio technology and high definition audio go hand in hand.

In the late 70’s, I was experimenting with digital recording on the Sony PCM-F1, which eventually led to R-DAT recorders in the late 80’s. I was basically using DAT recording technology for most of my early Virtual Audio recordings. I found right away that in the case of Virtual Audio, the higher sampling rate of 48 kHz on the DAT recorder was superior to recordings at 44.1 kHz. There was clearly more air and the localization of the sounds was much better. Also, the lower noise floor of digital gave better realism, since there was no tape hiss to hear. It was around this time that I postulated that to totally duplicate reality in audio, 32-bit conversion at 384 kHz was needed. This was primarily based on the Nyquist sampling theorem and the artifacts created by brick-wall anti-aliasing filters. I thought that if we sampled at a high enough rate, anti-aliasing filters could be eliminated entirely.
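A rough illustration of the arithmetic behind that idea, sketched in Python: the Nyquist frequency is half the sample rate, and whatever lies between the top of the audible band and the Nyquist frequency is the room an anti-aliasing filter has to work in. The 20 kHz audible limit and the function names are assumptions made for this sketch.

```python
# Illustrative arithmetic only. The 20 kHz audible limit is an assumed round figure.
AUDIBLE_LIMIT_HZ = 20_000


def nyquist_hz(sample_rate_hz):
    """Highest frequency that can be represented at a given sample rate."""
    return sample_rate_hz / 2


def transition_band_hz(sample_rate_hz, passband_edge_hz=AUDIBLE_LIMIT_HZ):
    """Room between the top of the passband and the Nyquist frequency."""
    return nyquist_hz(sample_rate_hz) - passband_edge_hz


if __name__ == "__main__":
    for rate in (44_100, 48_000, 384_000):
        print(rate, nyquist_hz(rate), transition_band_hz(rate))
```

At 44.1 kHz the filter has only 2,050 Hz to go from full pass to full stop, which is why it has to be so steep. At 48 kHz it has 4,000 Hz, and at 384 kHz it has 172,000 Hz, so a far gentler filter would do. The sketch says nothing about whether the filter can be dropped altogether.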

My early experiments in binaural recording were with various dummy heads. Some were better than others in their ability to accurately reproduce an acoustic event. Many important details became clear as I tried to implement dummy head recording into my record productions.

- One big problem revealed itself very early. It was a problem of compatibility between speakers and headphones.

- Another was front to back location discrepancies.

- Still another was that directional localization tended to vary depending on each individual’s unique set of ears.

- I also discovered that in record production and mixing, once a dummy head recording was made, the directional information was fixed and could not be changed after the fact.

- Ambience and reverb tended to render differently on headphones and speakers.

- Processing and equalizing a binaural recording after the fact usually destroyed the spatialization in some way.

I was determined to attack each one of these problems and find solutions. This led me on a path to meeting and working with many scientists and researchers in this field from all over the world.

The incompatibility between headphones and speakers is a complex issue. Speakers need to have a very flat frequency response and be phase coherent. That is a difficult task in itself. But also, in real life, sounds basically originate at a point source, in other words, a specific spot in space. Speakers by design tend to spread out or smear a sound to give an illusion of spatial quality. This is diametrically opposed to a point source speaker optimized for Virtual Audio. Also, a speaker that does come close to being a point source is not musically interesting to listen to unless the source audio is rendered in Virtual Audio.

There is also a fundamental difference in the approach of speaker technology, which still exists today. The conventional concept of the audio chain begins with one or more microphones, passes through a source (audio player), DAC and amplifier, and ends with the acoustic output of the speaker. When conceptualizing sound as Virtual Audio, the beginning of the chain requires encoding of spatial attributes, usually with a dummy head or a head model (I will discuss that more at length later), and the chain ends at your ears. The main difference is that the latter method totally eliminates the ambience, delays and reverberant field of the listening room. All the acoustics are already encoded in the Virtual Audio recording. One would otherwise be listening to a room within a room. Probably not such a good idea!

Another problem with speakers is crosstalk. Virtual Audio encoded sound is designed to be presented discretely to each ear. With speakers, we have the audio in the right speaker bleeding into the left ear of the listener and vice versa. This causes cancellation of important frequency and time characteristics and, as a result, the spatial image is radically compromised. A similar problem is caused by audio reflections bouncing around the room and getting into both ears. Again, this causes cancellation of important frequency and time characteristics, which greatly compromises the spatial effect.
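The bleed between channels can be sketched with a very simple model in Python: each ear receives its own speaker directly, plus a delayed and attenuated copy of the opposite speaker. The delay and gain values, and the function name `ear_signals`, are placeholders chosen for illustration, not measurements, and the model ignores room reflections and the filtering effect of the head.

```python
def ear_signals(left_spk, right_spk, delay_samples=12, gain=0.7):
    """Return (left_ear, right_ear) for a simple two-speaker crosstalk model.

    Both inputs are equal-length lists of samples. The opposite speaker
    reaches each ear later (delay_samples) and quieter (gain).
    """
    total = len(left_spk) + delay_samples

    def direct(signal):
        # Same-side path: no extra delay, padded to the common length.
        return list(signal) + [0.0] * (total - len(signal))

    def crossed(signal):
        # Opposite-side path: delayed by the extra travel time.
        return [0.0] * delay_samples + list(signal)

    left_ear = [d + gain * x for d, x in zip(direct(left_spk), crossed(right_spk))]
    right_ear = [d + gain * x for d, x in zip(direct(right_spk), crossed(left_spk))]
    return left_ear, right_ear


if __name__ == "__main__":
    # A click in the right channel only. On headphones the left ear would hear nothing.
    left, right = ear_signals([0, 0, 0, 0], [1, 0, 0, 0], delay_samples=2, gain=0.5)
    print(left)
    print(right)
```

When both channels carry related material, each ear sums a signal with a delayed copy of something similar, which is the kind of comb filtering that produces the cancellations described above.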

Headphones eliminate all of the speaker problems I mentioned above. My initial Virtual Audio research was primarily based around headphones. I became quite the listener of many brands of headphones, trying to find what I considered the best for rendering a Virtual Audio spatial experience. In 1988, I concluded that Sennheiser headphones were well suited for the task. They are still quite good today, culminating with Sennheiser’s HD-800 headphone. But I still was not getting the realism that I wanted. My search ended in 1991 when I discovered the Stax Lambda Pro series electrostatic headphones. As my research reference through the 90’s, I used the Stax Lambda Pro with a specially designed amplifier that was equalized properly for the headphone, the Fletcher-Munson curve and human hearing. 256 filters for both right and left ears accomplished this.

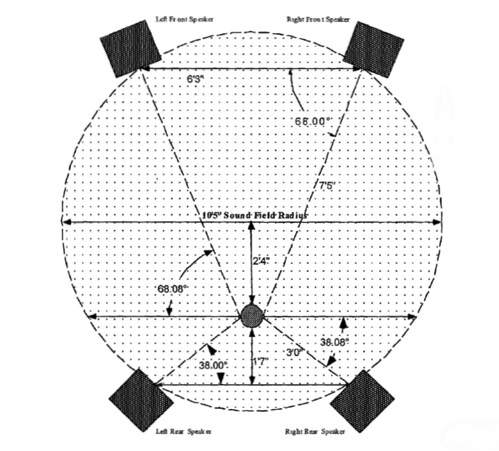

Also in 1991, I began experimenting with speakers. I found the best speaker for my research was the Meyer HD-1 near field monitor. These monitors are free field equalized, which perfectly matched the equalization of the dummy head I was using. I used four HD-1s, configured two in front and two in the rear. The source was a two-channel Virtual Audio recording; the rear left and right speakers received the same signals as the front left and right speakers. This setup using the four HD-1s rendered like a headphone. In some ways, it even sounded better than headphones.

The problem that I have never been able to solve to my satisfaction in real world applications is the rendering of sound sources in close proximity to the head on speakers. There was always a point when the sound source would spatially distort when moved within a certain close proximity to the head. In other words, it would tend to just stay at a specific distance and just get louder rather than move intimately close to the ear. This anomaly is basically eliminated in an anechoic environment.

The problem with front and back discrepancies involved confusion when a sound was directly in front of the listener on headphones. More often than not, a listener would report the sound moving directly to the back of the head. I found that there were many possible causes for this effect. One was that the dummy head used (the head model) did not have the same type of ears as the listener. Another is that, in real life, one tends to move one’s head. A slight movement of the head seems to give back the cues required for correct frontal imaging. I also found that the headphone equalization was very important in this regard. A flat curve is required.
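One way to see why a small head movement helps is to look at the interaural time difference (ITD) alone. The Python sketch below uses a simplified far-field model with two ears on a line and no head shadow. The ear spacing, the speed of sound and the function name `itd_us` are assumptions for illustration, and real hearing relies on much more than this one cue.

```python
import math

EAR_SPACING_M = 0.175   # assumed distance between the ears
SPEED_OF_SOUND = 343.0  # m/s, assumed room temperature


def itd_us(source_az_deg, head_turn_deg=0.0):
    """Far-field ITD in microseconds.

    Azimuth is measured clockwise from straight ahead (0 = front, 180 = back).
    A positive result means the right ear hears the sound first.
    """
    relative = math.radians(source_az_deg - head_turn_deg)
    return EAR_SPACING_M * math.sin(relative) / SPEED_OF_SOUND * 1e6


if __name__ == "__main__":
    print(itd_us(0), itd_us(180))        # head still: both essentially zero
    print(itd_us(0, 5), itd_us(180, 5))  # head turned 5 degrees to the right
```

With the head still, a source directly in front and a source directly behind both give an ITD of essentially zero, so this cue cannot tell them apart. Turn the head slightly to the right and the front source now reaches the left ear first while the rear source reaches the right ear first. Headphones that do not track head movement never supply that difference.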

When it came to doing real world music productions, the limitations of using a dummy head became obvious. With a dummy head, the spatial aspects, once recorded, become fixed. In other words, you can no longer move a specific sound to another location. When mixing, it quite often becomes necessary to move the sounds to a different location. This is a severe limitation. If one is just recording a live event in stereo in a “you are there” type concept, the dummy head can be an excellent choice. But in multi-track recording, using the dummy head becomes less than ideal. For example, let’s say you use the dummy head to do overdub recordings. As you add each stereo pair, the room ambience builds up very quickly and becomes totally unnatural. Then we have the problem of how to record things like synthesizers or any instrument that normally runs direct into the recorder. If one wants to record a synthesizer with a dummy head, the engineer has to run the synthesizer through speakers, and the room ambience again becomes a factor. One quickly sees the limitation of this approach.

The answer to this problem is using a computer to synthesize the dummy head and to create Virtual Audio reverberation. Using a computer, one can create any kind of 3D ambience, move the sounds to any location after the fact…or actually move a sound around in virtual space using automation. One can build as many tracks as the computer will allow in real time. The really exciting aspect of using the computer to create Virtual Audio is that one is no longer anchored to the concept of the “real” world. We can create listener viewpoints that in the past were impossible.
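To give a flavor of what synthesizing a head in software means, here is a deliberately crude Python sketch that places a mono sound to the left or right using only two cues: an interaural time delay from the Woodworth spherical-head formula, and a simple level difference. It is not how VAPS works. Practical systems typically filter each ear with measured head-related responses. The head radius, the 6 dB maximum level difference, the sine-law level model and all names here are assumptions for illustration.

```python
import math

HEAD_RADIUS_M = 0.0875  # assumed average head radius
SPEED_OF_SOUND = 343.0  # m/s, assumed room temperature


def itd_seconds(azimuth_deg):
    """Woodworth ITD for azimuth in [-90, 90] degrees (positive = to the right)."""
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))


def spatialize(mono, azimuth_deg, sample_rate=48_000, max_ild_db=6.0):
    """Return (left, right) sample lists with delay and level cues applied."""
    delay = round(abs(itd_seconds(azimuth_deg)) * sample_rate)
    # Assumed level model: attenuation of the far ear grows with sin(azimuth).
    ild_db = max_ild_db * abs(math.sin(math.radians(azimuth_deg)))
    far_gain = 10 ** (-ild_db / 20)
    near = list(mono) + [0.0] * delay                   # ear facing the source
    far = [0.0] * delay + [far_gain * s for s in mono]  # later and quieter
    return (far, near) if azimuth_deg >= 0 else (near, far)


if __name__ == "__main__":
    left, right = spatialize([1.0], 90)
    print(len(left), left.index(max(left)), right[0])
```

Because the position is just a parameter, it can be changed after the fact, or varied from one block of samples to the next to move a sound under automation, which is exactly what a fixed dummy head recording cannot do.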

Greatly motivated by this concept, I went to work and in 1991, I assembled the first version of the Virtual Audio Processing System (VAPS).

Next month, I will discuss the VAPS in greater detail.

See you next month here at the Event Horizon!

You can check out my various activities at these links:

http://transformation.ishwish.net

http://currelleffect.ishwish.net

http://www.audiocybernetics.com

http://ishwish.blog131.fc2.com

http://magnatune.com/artists/ishwish

Or…just type my name Christopher Currell into your browser.

Current Headphone System: Woo Audio WES amplifier with all available options, Headamp Blue Hawaii with ALPS RK50, two Stax SR-009 electrostatic headphones, Antelope Audio Zodiac+mastering DAC with Voltikus PSU, PS Audio PerfectWave P3 Power Plant. Also Wireworld USB cable and custom audio cable by Woo Audio. MacMini audio server with iPad wireless interface.
